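1. Preface

This article reproduces the convolutional neural network ResNet with PaddlePaddle. The code here is structured more plainly than PaddlePaddle's built-in ResNet implementation, so it may be an easier reference for understanding how a ResNet model is assembled.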

This article is part of the series: Reproducing Classic CNNs

Previous article: GoogLeNet

2. ResNet

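In 2013, Lei Jimmy Ba and Rich Caruana examined deep neural networks in "Do Deep Nets Really Need to be Deep?". Their analysis and experiments indicated that deeper convolutional neural networks, when trained in the usual way, reach higher recognition accuracy than shallow ones.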

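If the layers added to a deeper network are set to identity mappings while the weights of the original shallow layers are kept unchanged, the deeper network performs exactly as the shallow one does. In other words, the solution space of the shallow network is a subset of the solution space of the deeper network, so the deeper network's solution space contains at least one solution that is no worse than the shallow network's.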
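The construction argument above can be checked on a toy example. The following is a minimal pure-Python sketch, not part of the original article: `linear`, `identity` and `run` are hypothetical helpers, and the weights are arbitrary illustrative numbers.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, t) for t in v]


def linear(weights, bias):
    """Build a fully connected layer from a weight matrix and a bias vector."""
    def layer(v):
        return [sum(w * x for w, x in zip(row, v)) + b
                for row, b in zip(weights, bias)]
    return layer


def identity(v):
    """A layer that returns its input unchanged."""
    return list(v)


def run(layers, v):
    """Apply the layers in order."""
    for layer in layers:
        v = layer(v)
    return v


# A "shallow" network, and a "deep" one that appends ten identity layers to it.
shallow = [linear([[1.0, -2.0], [0.5, 3.0]], [0.1, -0.2]), relu]
deep = shallow + [identity] * 10

x = [0.3, -1.2]
shallow_out = run(shallow, x)
deep_out = run(deep, x)
print(shallow_out == deep_out)
```

Because the extra layers are identities, the deeper network reproduces the shallow network's output exactly, which is the sense in which the shallow solution is contained in the deeper network's solution space.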

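In 2015, Kaiming He and his co-authors, the authors of ResNet, reported that as more layers are stacked onto a network, a relatively shallow network outperforms its deeper counterpart on both the training set and the test set, and does so throughout training. In other words, stacking more layers caused the network's performance to drop markedly.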

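Because performance also drops on the training set, overfitting can be ruled out, and the introduction of Batch Normalization layers has largely solved the vanishing and exploding gradient problems of plain networks. This "degradation" phenomenon indicates that models with a similar structure but different depths are not equally hard to optimize, and that the difficulty does not grow linearly: the deeper the model, the harder it is to optimize.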

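There are two ways to tackle the degradation problem. One is to adjust the solver, for example with better initialization or a better gradient descent algorithm. The other is to adjust the model structure so that the model is easier to optimize (changing the structure in effect changes the shape of the error surface).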

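ResNet won the ImageNet competition in 2015, bringing the top-5 classification error on ImageNet down to 3.57%, a result that is even better than the accuracy typically achieved by the human eye. ResNet tackles the degradation problem from the model-structure side.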

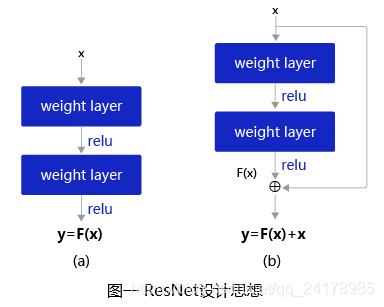

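Call a stack of several layers a Block. For a given Block, let the function it can represent be $F(x)$ and the underlying mapping it is expected to fit be $H(x)$. ResNet proposes that, rather than having $F(x)$ learn the underlying mapping $H(x)$ directly as in Figure 1(a), the Block should learn the residual $H(x)-x$ as in Figure 1(b). That is, $F(x)$ is defined as $H(x)-x$, so the original forward path becomes $F(x)+x$, and $F(x)+x$ is used to fit $H(x)$.

Kaiming He and his co-authors argue that this makes the model easier to optimize, because driving $F(x)\rightarrow 0$ is easier than learning $F(x)$ as an identity mapping. During training, if passing through some convolutional layers does not improve performance (or even reduces it because of degradation), the network tends to update its weights so that $F(x)$ approaches 0. The output of those layers is then approximately the input $x$, which amounts to the computation "skipping over" them. This skip connection is how ResNet alleviates degradation.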
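To illustrate why $F(x)\rightarrow 0$ is a convenient target, here is a small scalar sketch. It is an assumption-laden toy rather than the article's model: `residual_branch`, `plain_block` and `residual_block` are hypothetical names, each "layer" is a single scalar weight, and the ReLU that a real residual block applies after the addition is left out.

```python
def relu(t):
    return max(0.0, t)


def residual_branch(x, w1, w2):
    """F(x): two scalar 'layers' with a ReLU in between."""
    return w2 * relu(w1 * x)


def plain_block(x, w1, w2):
    """A plain block outputs F(x) only."""
    return residual_branch(x, w1, w2)


def residual_block(x, w1, w2):
    """A residual block outputs F(x) + x."""
    return residual_branch(x, w1, w2) + x


inputs = [-1.5, 0.0, 2.0]

# With all weights at zero, F(x) = 0 for every input.
plain_out = [plain_block(x, 0.0, 0.0) for x in inputs]
residual_out = [residual_block(x, 0.0, 0.0) for x in inputs]

print(plain_out)
print(residual_out)
```

With zero weights the plain block discards its input, while the residual block passes it through unchanged. The plain block would need carefully tuned weights to act as an identity, whereas the residual block only needs its weights to shrink toward zero.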

2.1 Residual Block

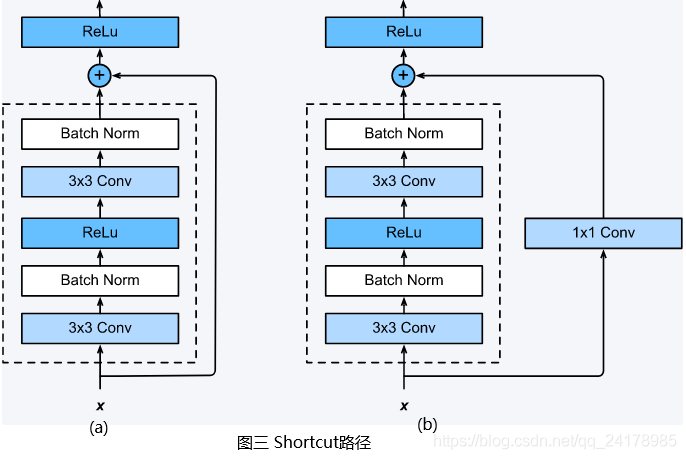

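The residual block is the basic building unit of a residual network (ResNet); a ResNet is formed by chaining many similar residual blocks. As Figure 2 shows, a residual block has two paths, $F(x)$ and $x$. The $F(x)$ path fits the residual and is called the residual path. The $x$ path is an identity mapping and is called the shortcut. Through this skip connection, the input $x$ can propagate data forward, and gradients backward, more quickly.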
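The remark about gradients can be made concrete with a short derivation. As a sketch, ignore the ReLU applied after the addition and write the block output as $y = F(x) + x$; the symbol $\mathcal{L}$ for the training loss and $I$ for the identity matrix are introduced here for illustration only.

```latex
y = F(x) + x
\quad\Longrightarrow\quad
\frac{\partial \mathcal{L}}{\partial x}
  = \frac{\partial \mathcal{L}}{\partial y}
    \left( \frac{\partial F(x)}{\partial x} + I \right)
  = \frac{\partial \mathcal{L}}{\partial y}\,\frac{\partial F(x)}{\partial x}
    + \frac{\partial \mathcal{L}}{\partial y}
```

The second term reaches $x$ without passing through any weight of the residual path, so under these assumptions the gradient arriving at the block input does not vanish even when $\partial F(x)/\partial x$ is small.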

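There are two kinds of residual blocks. The first, shown in Figure 2(b), contains a bottleneck structure. The bottleneck mainly serves to reduce computational complexity: the input first passes through a 1×1 convolution that reduces the number of channels, then a 3×3 convolution that extracts features, and finally a 1×1 convolution that restores the number of channels. The structure is narrow in the middle and wide at both ends, like a bottleneck, hence the name. The second kind, shown in Figure 2(a), has no bottleneck and is called a Basic Block; it consists of two 3×3 convolutional layers. Bottleneck Blocks are used in ResNet50, ResNet101 and ResNet152, while Basic Blocks are used in ResNet18 and ResNet34.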
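The saving from the bottleneck can be estimated by counting convolution weights. The sketch below is an illustration under stated assumptions: `conv_params` is a hypothetical helper, biases are omitted (as in the article's `bias_attr=False` convolutions), and the width of 256 channels with a 4x reduction is chosen to match the first stage of ResNet50.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weight count of a bias-free 2D convolution with a square kernel."""
    return in_channels * out_channels * kernel_size * kernel_size


channels = 256

# Two 3x3 convolutions at full width, as a Basic Block would use.
basic_style = 2 * conv_params(channels, channels, 3)

# 1x1 reduce -> 3x3 at reduced width -> 1x1 restore, as in a Bottleneck Block.
mid = channels // 4
bottleneck_style = (conv_params(channels, mid, 1)
                    + conv_params(mid, mid, 3)
                    + conv_params(mid, channels, 1))

print(basic_style, bottleneck_style, round(basic_style / bottleneck_style, 1))
```

At 256 channels, two full-width 3×3 convolutions need roughly 17 times as many weights as the bottleneck arrangement, which is why the deeper variants can afford wider feature maps.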

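The shortcut path also comes in two kinds. As shown in panel (a) of the figure below, when the output of the residual path has the same number of channels and the same feature-map size as the input $x$, the shortcut passes $x$ through unchanged. When the number of channels or the feature-map size differs, the shortcut applies a 1×1 convolution to $x$ to downsample it, so that the shortcut output matches the residual-path output in both channel count and feature-map size.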
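Whether the two paths end up with the same feature-map size can be checked with the usual convolution size formula. This is a small sketch assuming dilation 1 and the kernel, stride and padding values used later in the article's code; `conv_output_size` is a hypothetical helper, not a PaddlePaddle function.

```python
def conv_output_size(size, kernel_size, stride, padding):
    """Spatial output size of a convolution with dilation 1."""
    return (size + 2 * padding - kernel_size) // stride + 1


# Residual path: a 3x3 convolution with stride 2 and padding 1.
residual_path = conv_output_size(224, kernel_size=3, stride=2, padding=1)

# Shortcut path: a 1x1 convolution with stride 2 and no padding.
shortcut_path = conv_output_size(224, kernel_size=1, stride=2, padding=0)

print(residual_path, shortcut_path)
```

Both paths map a 224×224 input to 112×112, so the two outputs can be added element-wise once the 1×1 convolution has also matched the channel count.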

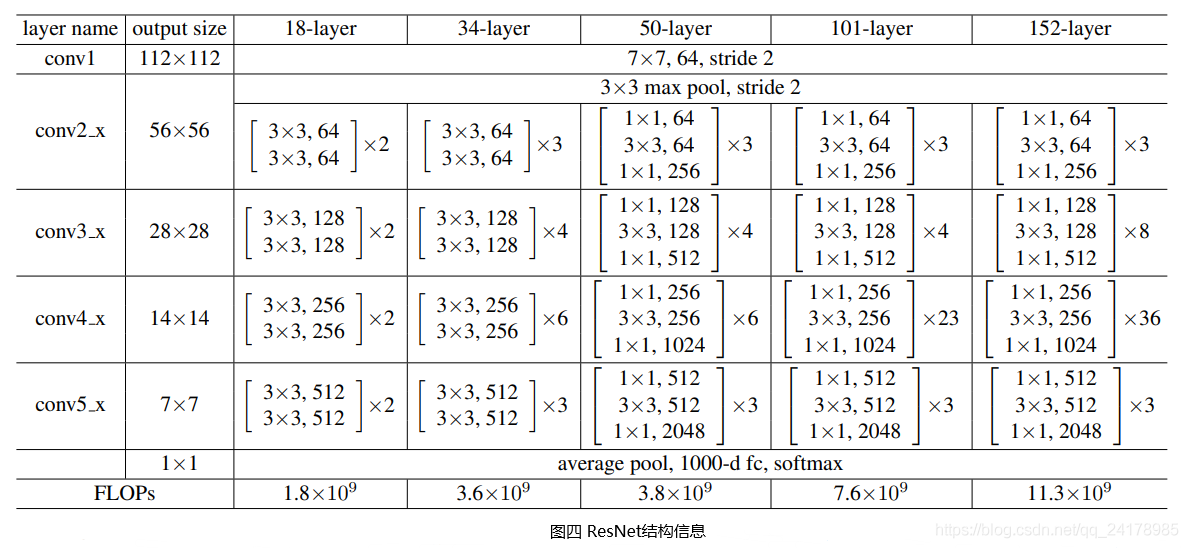

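2.2 ResNet Network Structure

A ResNet is built by chaining multiple residual blocks. Through skip connections, the network can bypass some layers once adding more layers no longer improves performance. This greatly alleviates the degradation of deep networks, makes networks with hundreds or even a thousand layers trainable, and substantially improves the performance of deep neural networks.

As the ResNet structure diagram above shows, every depth configuration of ResNet shares the same "head" and "tail". The network starts with a 7×7 convolutional layer that extracts texture details from the input image, and ends with a global average pooling layer (GAP, which reduces the feature map to 1×1) followed by a fully connected layer (which maps the output dimension to the number of classes). What distinguishes the different depth configurations is the type and number of residual blocks they contain: ResNet18 and ResNet34 use Basic Blocks, while ResNet50, ResNet101 and ResNet152 use Bottleneck Blocks.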

3. Reproducing the ResNet Model

To reproduce ResNet with PaddlePaddle, first define the BasicBlock and BottleneckBlock modules, both of which inherit from paddle.nn.Layer. The code is as follows:

```python
# -*- coding: utf-8 -*-
# @Time    : 2021/8/19 19:11
# @Author  : He Ruizhi
# @File    : resnet.py
# @Software: PyCharm
import paddle


class BasicBlock(paddle.nn.Layer):
    """
    Residual block used by resnet18 and resnet34

    Args:
        input_channels (int): number of input channels of this residual block
        output_channels (int): number of output channels of this residual block
        stride (int): stride of the first convolution in the block; a stride of 2 halves the feature-map size
    """
    def __init__(self, input_channels, output_channels, stride):
        super(BasicBlock, self).__init__()
        self.input_channels = input_channels
        self.output_channels = output_channels
        self.stride = stride

        self.conv_bn_block1 = paddle.nn.Sequential(
            paddle.nn.Conv2D(in_channels=input_channels, out_channels=output_channels, kernel_size=3,
                             stride=stride, padding=1, bias_attr=False),
            # BatchNorm2D normalizes each channel separately over a batch, so the channel count must be given
            paddle.nn.BatchNorm2D(output_channels),
            paddle.nn.ReLU()
        )
        self.conv_bn_block2 = paddle.nn.Sequential(
            paddle.nn.Conv2D(in_channels=output_channels, out_channels=output_channels, kernel_size=3,
                             stride=1, padding=1, bias_attr=False),
            paddle.nn.BatchNorm2D(output_channels)
        )

        # When stride is not 1, or the input and output channel counts of the block differ,
        # the block input has to be transformed before the addition
        if stride != 1 or input_channels != output_channels:
            self.down_sample_block = paddle.nn.Sequential(
                paddle.nn.Conv2D(in_channels=input_channels, out_channels=output_channels, kernel_size=1,
                                 stride=stride, bias_attr=False),
                paddle.nn.BatchNorm2D(output_channels)
            )

        self.relu_out = paddle.nn.ReLU()

    def forward(self, inputs):
        x = self.conv_bn_block1(inputs)
        x = self.conv_bn_block2(x)

        # If inputs and x have different shapes, adjust inputs
        if self.stride != 1 or self.input_channels != self.output_channels:
            inputs = self.down_sample_block(inputs)

        outputs = paddle.add(inputs, x)
        outputs = self.relu_out(outputs)
        return outputs


class BottleneckBlock(paddle.nn.Layer):
    """
    Residual block used by resnet50, resnet101 and resnet152

    Args:
        input_channels (int): number of input channels of this residual block
        output_channels (int): number of output channels of this residual block
        stride (int): stride of the 3x3 convolution in the block; a stride of 2 halves the feature-map size
    """
    def __init__(self, input_channels, output_channels, stride):
        super(BottleneckBlock, self).__init__()
        self.input_channels = input_channels
        self.output_channels = output_channels
        self.stride = stride

        self.conv_bn_block1 = paddle.nn.Sequential(
            paddle.nn.Conv2D(in_channels=input_channels, out_channels=output_channels // 4, kernel_size=1,
                             stride=1, bias_attr=False),
            paddle.nn.BatchNorm2D(output_channels // 4),
            paddle.nn.ReLU()
        )
        self.conv_bn_block2 = paddle.nn.Sequential(
            paddle.nn.Conv2D(in_channels=output_channels // 4, out_channels=output_channels // 4, kernel_size=3,
                             stride=stride, padding=1, bias_attr=False),
            paddle.nn.BatchNorm2D(output_channels // 4),
            paddle.nn.ReLU()
        )
        self.conv_bn_block3 = paddle.nn.Sequential(
            paddle.nn.Conv2D(in_channels=output_channels // 4, out_channels=output_channels, kernel_size=1,
                             stride=1, bias_attr=False),
            paddle.nn.BatchNorm2D(output_channels)
        )

        # If the input and the output of the three conv_bn_blocks have different shapes,
        # add a 1x1 convolution that is applied to the input so that the two shapes match
        if stride != 1 or input_channels != output_channels:
            self.down_sample_block = paddle.nn.Sequential(
                paddle.nn.Conv2D(in_channels=input_channels, out_channels=output_channels, kernel_size=1,
                                 stride=stride, bias_attr=False),
                paddle.nn.BatchNorm2D(output_channels)
            )

        self.relu_out = paddle.nn.ReLU()

    def forward(self, inputs):
        x = self.conv_bn_block1(inputs)
        x = self.conv_bn_block2(x)
        x = self.conv_bn_block3(x)

        # If inputs and x have different shapes, adjust inputs
        if self.stride != 1 or self.input_channels != self.output_channels:
            inputs = self.down_sample_block(inputs)

        outputs = paddle.add(inputs, x)
        outputs = self.relu_out(outputs)
        return outputs
```

Set input_channels=64, output_channels=128 and stride=2 to instantiate a BasicBlock object, and inspect its structure with paddle.summary:

```python
basic_block = BasicBlock(64, 128, 2)
paddle.summary(basic_block, input_size=(None, 64, 224, 224))
```

The printed BasicBlock structure is as follows:

```
---------------------------------------------------------------------------
Layer (type)          Input Shape           Output Shape         Param #
===========================================================================
Conv2D-1              [[1, 64, 224, 224]]   [1, 128, 112, 112]   73,728
BatchNorm2D-1         [[1, 128, 112, 112]]  [1, 128, 112, 112]   512
ReLU-1                [[1, 128, 112, 112]]  [1, 128, 112, 112]   0
Conv2D-2              [[1, 128, 112, 112]]  [1, 128, 112, 112]   147,456
BatchNorm2D-2         [[1, 128, 112, 112]]  [1, 128, 112, 112]   512
Conv2D-3              [[1, 64, 224, 224]]   [1, 128, 112, 112]   8,192
BatchNorm2D-3         [[1, 128, 112, 112]]  [1, 128, 112, 112]   512
ReLU-2                [[1, 128, 112, 112]]  [1, 128, 112, 112]   0
===========================================================================
Total params: 230,912
Trainable params: 229,376
Non-trainable params: 1,536
---------------------------------------------------------------------------
Input size (MB): 12.25
Forward/backward pass size (MB): 98.00
Params size (MB): 0.88
Estimated Total Size (MB): 111.13
---------------------------------------------------------------------------
```

Set input_channels=64, output_channels=128 and stride=2 to instantiate a BottleneckBlock object, and inspect its structure with paddle.summary:

```python
bottleneck_block = BottleneckBlock(64, 128, 2)
paddle.summary(bottleneck_block, input_size=(None, 64, 224, 224))
```

The printed BottleneckBlock structure is as follows:

```
---------------------------------------------------------------------------
Layer (type)          Input Shape           Output Shape         Param #
===========================================================================
Conv2D-1              [[1, 64, 224, 224]]   [1, 32, 224, 224]    2,048
BatchNorm2D-1         [[1, 32, 224, 224]]   [1, 32, 224, 224]    128
ReLU-1                [[1, 32, 224, 224]]   [1, 32, 224, 224]    0
Conv2D-2              [[1, 32, 224, 224]]   [1, 32, 112, 112]    9,216
BatchNorm2D-2         [[1, 32, 112, 112]]   [1, 32, 112, 112]    128
ReLU-2                [[1, 32, 112, 112]]   [1, 32, 112, 112]    0
Conv2D-3              [[1, 32, 112, 112]]   [1, 128, 112, 112]   4,096
BatchNorm2D-3         [[1, 128, 112, 112]]  [1, 128, 112, 112]   512
Conv2D-4              [[1, 64, 224, 224]]   [1, 128, 112, 112]   8,192
BatchNorm2D-4         [[1, 128, 112, 112]]  [1, 128, 112, 112]   512
ReLU-3                [[1, 128, 112, 112]]  [1, 128, 112, 112]   0
===========================================================================
Total params: 24,832
Trainable params: 23,552
Non-trainable params: 1,280
---------------------------------------------------------------------------
Input size (MB): 12.25
Forward/backward pass size (MB): 107.19
Params size (MB): 0.09
Estimated Total Size (MB): 119.53
---------------------------------------------------------------------------
```

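Next, define a ResNet class that inherits from paddle.nn.Layer. The modules are defined in the __init__ method and the forward pass of the network is implemented in the forward function. The code is as follows: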

```python
class ResNet(paddle.nn.Layer):
    """
    Build a ResNet

    Args:
        layers (int): depth of the ResNet to build, one of [18, 34, 50, 101, 152]
        num_classes (int): number of output classes
    """
    def __init__(self, layers, num_classes=1000):
        super(ResNet, self).__init__()
        supported_layers = [18, 34, 50, 101, 152]
        assert layers in supported_layers, \
            'Supported layers are {}, but input layer is {}.'.format(supported_layers, layers)

        # For each depth: the residual block type, the number of blocks per stage,
        # and the output channels of each stage
        layers_config = {
            18: {'block_type': BasicBlock, 'num_blocks': [2, 2, 2, 2], 'out_channels': [64, 128, 256, 512]},
            34: {'block_type': BasicBlock, 'num_blocks': [3, 4, 6, 3], 'out_channels': [64, 128, 256, 512]},
            50: {'block_type': BottleneckBlock, 'num_blocks': [3, 4, 6, 3], 'out_channels': [256, 512, 1024, 2048]},
            101: {'block_type': BottleneckBlock, 'num_blocks': [3, 4, 23, 3], 'out_channels': [256, 512, 1024, 2048]},
            152: {'block_type': BottleneckBlock, 'num_blocks': [3, 8, 36, 3], 'out_channels': [256, 512, 1024, 2048]}
        }

        # First module of ResNet: 7x7 convolution with stride 2 and 64 channels + BN
        # + ReLU + 3x3 max pooling with stride 2
        self.conv = paddle.nn.Conv2D(in_channels=3, out_channels=64, kernel_size=7, stride=2,
                                     padding=3, bias_attr=False)
        self.bn = paddle.nn.BatchNorm2D(64)
        self.relu = paddle.nn.ReLU()
        self.max_pool = paddle.nn.MaxPool2D(kernel_size=3, stride=2, padding=1)

        # Number of channels entering the next residual block
        input_channels = 64
        block_list = []
        for i, block_num in enumerate(layers_config[layers]['num_blocks']):
            for order in range(block_num):
                # The first block of every stage except the first one uses stride 2
                block_list.append(layers_config[layers]['block_type'](input_channels,
                                                                      layers_config[layers]['out_channels'][i],
                                                                      2 if order == 0 and i != 0 else 1))
                input_channels = layers_config[layers]['out_channels'][i]
        # Pack all residual blocks together
        self.residual_block = paddle.nn.Sequential(*block_list)

        # Global average pooling
        self.avg_pool = paddle.nn.AdaptiveAvgPool2D(output_size=1)
        self.flatten = paddle.nn.Flatten()
        # Output layer
        self.fc = paddle.nn.Linear(in_features=layers_config[layers]['out_channels'][-1], out_features=num_classes)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        x = self.relu(x)
        x = self.max_pool(x)
        x = self.residual_block(x)
        x = self.avg_pool(x)
        x = self.flatten(x)
        x = self.fc(x)
        return x
```
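The nested loop in `__init__` decides the input channels, output channels and stride of every residual block. The sketch below replays that loop in plain Python so the resulting plan can be inspected without PaddlePaddle. `STAGE_CONFIG` and `block_plan` are hypothetical names introduced for this illustration, and only two of the five depths are listed.

```python
# (block type name, blocks per stage, output channels per stage), copied from layers_config
STAGE_CONFIG = {
    18: ("BasicBlock", [2, 2, 2, 2], [64, 128, 256, 512]),
    50: ("BottleneckBlock", [3, 4, 6, 3], [256, 512, 1024, 2048]),
}


def block_plan(layers):
    """Return one (input_channels, output_channels, stride) tuple per residual block."""
    _, num_blocks, out_channels = STAGE_CONFIG[layers]
    input_channels = 64  # channels leaving the 7x7 stem and the max pooling
    plan = []
    for i, block_num in enumerate(num_blocks):
        for order in range(block_num):
            # Only the first block of stages 2 to 4 downsamples.
            stride = 2 if order == 0 and i != 0 else 1
            plan.append((input_channels, out_channels[i], stride))
            input_channels = out_channels[i]
    return plan


plan18 = block_plan(18)
plan50 = block_plan(50)
for row in plan18:
    print(row)
```

In this plan the first stage never downsamples, because the max pooling in the stem has already halved the feature map, and each later stage halves it exactly once in its first block.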

Set layers=50 to instantiate a ResNet50 model object, and inspect the ResNet50 model structure with paddle.summary:

```python
if __name__ == '__main__':
    # basic_block = BasicBlock(64, 128, 2)
    # paddle.summary(basic_block, input_size=(None, 64, 224, 224))

    # bottleneck_block = BottleneckBlock(64, 128, 2)
    # paddle.summary(bottleneck_block, input_size=(None, 64, 224, 224))

    resnet50 = ResNet(50)
    paddle.summary(resnet50, input_size=(None, 3, 224, 224))
```

The printed ResNet50 model structure is as follows:

```
-------------------------------------------------------------------------------
Layer (type)          Input Shape           Output Shape         Param #
===============================================================================
Conv2D-1              [[1, 3, 224, 224]]    [1, 64, 112, 112]    9,408
BatchNorm2D-1         [[1, 64, 112, 112]]   [1, 64, 112, 112]    256
ReLU-1                [[1, 64, 112, 112]]   [1, 64, 112, 112]    0
MaxPool2D-1           [[1, 64, 112, 112]]   [1, 64, 56, 56]      0
Conv2D-2              [[1, 64, 56, 56]]     [1, 64, 56, 56]      4,096
BatchNorm2D-2         [[1, 64, 56, 56]]     [1, 64, 56, 56]      256
ReLU-2                [[1, 64, 56, 56]]     [1, 64, 56, 56]      0
Conv2D-3              [[1, 64, 56, 56]]     [1, 64, 56, 56]      36,864
BatchNorm2D-3         [[1, 64, 56, 56]]     [1, 64, 56, 56]      256
ReLU-3                [[1, 64, 56, 56]]     [1, 64, 56, 56]      0
Conv2D-4              [[1, 64, 56, 56]]     [1, 256, 56, 56]     16,384
BatchNorm2D-4         [[1, 256, 56, 56]]    [1, 256, 56, 56]     1,024
Conv2D-5              [[1, 64, 56, 56]]     [1, 256, 56, 56]     16,384
BatchNorm2D-5         [[1, 256, 56, 56]]    [1, 256, 56, 56]     1,024
ReLU-4                [[1, 256, 56, 56]]    [1, 256, 56, 56]     0
BottleneckBlock-1     [[1, 64, 56, 56]]     [1, 256, 56, 56]     0
Conv2D-6              [[1, 256, 56, 56]]    [1, 64, 56, 56]      16,384
BatchNorm2D-6         [[1, 64, 56, 56]]     [1, 64, 56, 56]      256
ReLU-5                [[1, 64, 56, 56]]     [1, 64, 56, 56]      0
Conv2D-7              [[1, 64, 56, 56]]     [1, 64, 56, 56]      36,864
BatchNorm2D-7         [[1, 64, 56, 56]]     [1, 64, 56, 56]      256
ReLU-6                [[1, 64, 56, 56]]     [1, 64, 56, 56]      0
Conv2D-8              [[1, 64, 56, 56]]     [1, 256, 56, 56]     16,384
BatchNorm2D-8         [[1, 256, 56, 56]]    [1, 256, 56, 56]     1,024
ReLU-7                [[1, 256, 56, 56]]    [1, 256, 56, 56]     0
BottleneckBlock-2     [[1, 256, 56, 56]]    [1, 256, 56, 56]     0
Conv2D-9              [[1, 256, 56, 56]]    [1, 64, 56, 56]      16,384
BatchNorm2D-9         [[1, 64, 56, 56]]     [1, 64, 56, 56]      256
ReLU-8                [[1, 64, 56, 56]]     [1, 64, 56, 56]      0
Conv2D-10             [[1, 64, 56, 56]]     [1, 64, 56, 56]      36,864
BatchNorm2D-10        [[1, 64, 56, 56]]     [1, 64, 56, 56]      256
ReLU-9                [[1, 64, 56, 56]]     [1, 64, 56, 56]      0
Conv2D-11             [[1, 64, 56, 56]]     [1, 256, 56, 56]     16,384
BatchNorm2D-11        [[1, 256, 56, 56]]    [1, 256, 56, 56]     1,024
ReLU-10               [[1, 256, 56, 56]]    [1, 256, 56, 56]     0
BottleneckBlock-3     [[1, 256, 56, 56]]    [1, 256, 56, 56]     0
Conv2D-12             [[1, 256, 56, 56]]    [1, 128, 56, 56]     32,768
BatchNorm2D-12        [[1, 128, 56, 56]]    [1, 128, 56, 56]     512
ReLU-11               [[1, 128, 56, 56]]    [1, 128, 56, 56]     0
Conv2D-13             [[1, 128, 56, 56]]    [1, 128, 28, 28]     147,456
BatchNorm2D-13        [[1, 128, 28, 28]]    [1, 128, 28, 28]     512
ReLU-12               [[1, 128, 28, 28]]    [1, 128, 28, 28]     0
Conv2D-14             [[1, 128, 28, 28]]    [1, 512, 28, 28]     65,536
BatchNorm2D-14        [[1, 512, 28, 28]]    [1, 512, 28, 28]     2,048
Conv2D-15             [[1, 256, 56, 56]]    [1, 512, 28, 28]     131,072
BatchNorm2D-15        [[1, 512, 28, 28]]    [1, 512, 28, 28]     2,048
ReLU-13               [[1, 512, 28, 28]]    [1, 512, 28, 28]     0
BottleneckBlock-4     [[1, 256, 56, 56]]    [1, 512, 28, 28]     0
Conv2D-16             [[1, 512, 28, 28]]    [1, 128, 28, 28]     65,536
BatchNorm2D-16        [[1, 128, 28, 28]]    [1, 128, 28, 28]     512
ReLU-14               [[1, 128, 28, 28]]    [1, 128, 28, 28]     0
Conv2D-17             [[1, 128, 28, 28]]    [1, 128, 28, 28]     147,456
BatchNorm2D-17        [[1, 128, 28, 28]]    [1, 128, 28, 28]     512
ReLU-15               [[1, 128, 28, 28]]    [1, 128, 28, 28]     0
Conv2D-18             [[1, 128, 28, 28]]    [1, 512, 28, 28]     65,536
BatchNorm2D-18        [[1, 512, 28, 28]]    [1, 512, 28, 28]     2,048
ReLU-16               [[1, 512, 28, 28]]    [1, 512, 28, 28]     0
BottleneckBlock-5     [[1, 512, 28, 28]]    [1, 512, 28, 28]     0
Conv2D-19             [[1, 512, 28, 28]]    [1, 128, 28, 28]     65,536
BatchNorm2D-19        [[1, 128, 28, 28]]    [1, 128, 28, 28]     512
ReLU-17               [[1, 128, 28, 28]]    [1, 128, 28, 28]     0
Conv2D-20             [[1, 128, 28, 28]]    [1, 128, 28, 28]     147,456
BatchNorm2D-20        [[1, 128, 28, 28]]    [1, 128, 28, 28]     512
ReLU-18               [[1, 128, 28, 28]]    [1, 128, 28, 28]     0
Conv2D-21             [[1, 128, 28, 28]]    [1, 512, 28, 28]     65,536
BatchNorm2D-21        [[1, 512, 28, 28]]    [1, 512, 28, 28]     2,048
ReLU-19               [[1, 512, 28, 28]]    [1, 512, 28, 28]     0
BottleneckBlock-6     [[1, 512, 28, 28]]    [1, 512, 28, 28]     0
Conv2D-22             [[1, 512, 28, 28]]    [1, 128, 28, 28]     65,536
BatchNorm2D-22        [[1, 128, 28, 28]]    [1, 128, 28, 28]     512
ReLU-20               [[1, 128, 28, 28]]    [1, 128, 28, 28]     0
Conv2D-23             [[1, 128, 28, 28]]    [1, 128, 28, 28]     147,456
BatchNorm2D-23        [[1, 128, 28, 28]]    [1, 128, 28, 28]     512
ReLU-21               [[1, 128, 28, 28]]    [1, 128, 28, 28]     0
Conv2D-24             [[1, 128, 28, 28]]    [1, 512, 28, 28]     65,536
BatchNorm2D-24        [[1, 512, 28, 28]]    [1, 512, 28, 28]     2,048
ReLU-22               [[1, 512, 28, 28]]    [1, 512, 28, 28]     0
BottleneckBlock-7     [[1, 512, 28, 28]]    [1, 512, 28, 28]     0
Conv2D-25             [[1, 512, 28, 28]]    [1, 256, 28, 28]     131,072
BatchNorm2D-25        [[1, 256, 28, 28]]    [1, 256, 28, 28]     1,024
ReLU-23               [[1, 256, 28, 28]]    [1, 256, 28, 28]     0
Conv2D-26             [[1, 256, 28, 28]]    [1, 256, 14, 14]     589,824
BatchNorm2D-26        [[1, 256, 14, 14]]    [1, 256, 14, 14]     1,024
ReLU-24               [[1, 256, 14, 14]]    [1, 256, 14, 14]     0
Conv2D-27             [[1, 256, 14, 14]]    [1, 1024, 14, 14]    262,144
BatchNorm2D-27        [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    4,096
Conv2D-28             [[1, 512, 28, 28]]    [1, 1024, 14, 14]    524,288
BatchNorm2D-28        [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    4,096
ReLU-25               [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    0
BottleneckBlock-8     [[1, 512, 28, 28]]    [1, 1024, 14, 14]    0
Conv2D-29             [[1, 1024, 14, 14]]   [1, 256, 14, 14]     262,144
BatchNorm2D-29        [[1, 256, 14, 14]]    [1, 256, 14, 14]     1,024
ReLU-26               [[1, 256, 14, 14]]    [1, 256, 14, 14]     0
Conv2D-30             [[1, 256, 14, 14]]    [1, 256, 14, 14]     589,824
BatchNorm2D-30        [[1, 256, 14, 14]]    [1, 256, 14, 14]     1,024
ReLU-27               [[1, 256, 14, 14]]    [1, 256, 14, 14]     0
Conv2D-31             [[1, 256, 14, 14]]    [1, 1024, 14, 14]    262,144
BatchNorm2D-31        [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    4,096
ReLU-28               [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    0
BottleneckBlock-9     [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    0
Conv2D-32             [[1, 1024, 14, 14]]   [1, 256, 14, 14]     262,144
BatchNorm2D-32        [[1, 256, 14, 14]]    [1, 256, 14, 14]     1,024
ReLU-29               [[1, 256, 14, 14]]    [1, 256, 14, 14]     0
Conv2D-33             [[1, 256, 14, 14]]    [1, 256, 14, 14]     589,824
BatchNorm2D-33        [[1, 256, 14, 14]]    [1, 256, 14, 14]     1,024
ReLU-30               [[1, 256, 14, 14]]    [1, 256, 14, 14]     0
Conv2D-34             [[1, 256, 14, 14]]    [1, 1024, 14, 14]    262,144
BatchNorm2D-34        [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    4,096
ReLU-31               [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    0
BottleneckBlock-10    [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    0
Conv2D-35             [[1, 1024, 14, 14]]   [1, 256, 14, 14]     262,144
BatchNorm2D-35        [[1, 256, 14, 14]]    [1, 256, 14, 14]     1,024
ReLU-32               [[1, 256, 14, 14]]    [1, 256, 14, 14]     0
Conv2D-36             [[1, 256, 14, 14]]    [1, 256, 14, 14]     589,824
BatchNorm2D-36        [[1, 256, 14, 14]]    [1, 256, 14, 14]     1,024
ReLU-33               [[1, 256, 14, 14]]    [1, 256, 14, 14]     0
Conv2D-37             [[1, 256, 14, 14]]    [1, 1024, 14, 14]    262,144
BatchNorm2D-37        [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    4,096
ReLU-34               [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    0
BottleneckBlock-11    [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    0
Conv2D-38             [[1, 1024, 14, 14]]   [1, 256, 14, 14]     262,144
BatchNorm2D-38        [[1, 256, 14, 14]]    [1, 256, 14, 14]     1,024
ReLU-35               [[1, 256, 14, 14]]    [1, 256, 14, 14]     0
Conv2D-39             [[1, 256, 14, 14]]    [1, 256, 14, 14]     589,824
BatchNorm2D-39        [[1, 256, 14, 14]]    [1, 256, 14, 14]     1,024
ReLU-36               [[1, 256, 14, 14]]    [1, 256, 14, 14]     0
Conv2D-40             [[1, 256, 14, 14]]    [1, 1024, 14, 14]    262,144
BatchNorm2D-40        [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    4,096
ReLU-37               [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    0
BottleneckBlock-12    [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    0
Conv2D-41             [[1, 1024, 14, 14]]   [1, 256, 14, 14]     262,144
BatchNorm2D-41        [[1, 256, 14, 14]]    [1, 256, 14, 14]     1,024
ReLU-38               [[1, 256, 14, 14]]    [1, 256, 14, 14]     0
Conv2D-42             [[1, 256, 14, 14]]    [1, 256, 14, 14]     589,824
BatchNorm2D-42        [[1, 256, 14, 14]]    [1, 256, 14, 14]     1,024
ReLU-39               [[1, 256, 14, 14]]    [1, 256, 14, 14]     0
Conv2D-43             [[1, 256, 14, 14]]    [1, 1024, 14, 14]    262,144
BatchNorm2D-43        [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    4,096
ReLU-40               [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    0
BottleneckBlock-13    [[1, 1024, 14, 14]]   [1, 1024, 14, 14]    0
Conv2D-44             [[1, 1024, 14, 14]]   [1, 512, 14, 14]     524,288
BatchNorm2D-44        [[1, 512, 14, 14]]    [1, 512, 14, 14]     2,048
ReLU-41               [[1, 512, 14, 14]]    [1, 512, 14, 14]     0
Conv2D-45             [[1, 512, 14, 14]]    [1, 512, 7, 7]       2,359,296
BatchNorm2D-45        [[1, 512, 7, 7]]      [1, 512, 7, 7]       2,048
ReLU-42               [[1, 512, 7, 7]]      [1, 512, 7, 7]       0
Conv2D-46             [[1, 512, 7, 7]]      [1, 2048, 7, 7]      1,048,576
BatchNorm2D-46        [[1, 2048, 7, 7]]     [1, 2048, 7, 7]      8,192
Conv2D-47             [[1, 1024, 14, 14]]   [1, 2048, 7, 7]      2,097,152
BatchNorm2D-47        [[1, 2048, 7, 7]]     [1, 2048, 7, 7]      8,192
ReLU-43               [[1, 2048, 7, 7]]     [1, 2048, 7, 7]      0
BottleneckBlock-14    [[1, 1024, 14, 14]]   [1, 2048, 7, 7]      0
Conv2D-48             [[1, 2048, 7, 7]]     [1, 512, 7, 7]       1,048,576
BatchNorm2D-48        [[1, 512, 7, 7]]      [1, 512, 7, 7]       2,048
ReLU-44               [[1, 512, 7, 7]]      [1, 512, 7, 7]       0
Conv2D-49             [[1, 512, 7, 7]]      [1, 512, 7, 7]       2,359,296
BatchNorm2D-49        [[1, 512, 7, 7]]      [1, 512, 7, 7]       2,048
ReLU-45               [[1, 512, 7, 7]]      [1, 512, 7, 7]       0
Conv2D-50             [[1, 512, 7, 7]]      [1, 2048, 7, 7]      1,048,576
BatchNorm2D-50        [[1, 2048, 7, 7]]     [1, 2048, 7, 7]      8,192
ReLU-46               [[1, 2048, 7, 7]]     [1, 2048, 7, 7]      0
BottleneckBlock-15    [[1, 2048, 7, 7]]     [1, 2048, 7, 7]      0
Conv2D-51             [[1, 2048, 7, 7]]     [1, 512, 7, 7]       1,048,576
BatchNorm2D-51        [[1, 512, 7, 7]]      [1, 512, 7, 7]       2,048
ReLU-47               [[1, 512, 7, 7]]      [1, 512, 7, 7]       0
Conv2D-52             [[1, 512, 7, 7]]      [1, 512, 7, 7]       2,359,296
BatchNorm2D-52        [[1, 512, 7, 7]]      [1, 512, 7, 7]       2,048
ReLU-48               [[1, 512, 7, 7]]      [1, 512, 7, 7]       0
Conv2D-53             [[1, 512, 7, 7]]      [1, 2048, 7, 7]      1,048,576
BatchNorm2D-53        [[1, 2048, 7, 7]]     [1, 2048, 7, 7]      8,192
ReLU-49               [[1, 2048, 7, 7]]     [1, 2048, 7, 7]      0
BottleneckBlock-16    [[1, 2048, 7, 7]]     [1, 2048, 7, 7]      0
AdaptiveAvgPool2D-1   [[1, 2048, 7, 7]]     [1, 2048, 1, 1]      0
Flatten-1             [[1, 2048, 1, 1]]     [1, 2048]            0
Linear-1              [[1, 2048]]           [1, 1000]            2,049,000
===============================================================================
Total params: 25,610,152
Trainable params: 25,503,912
Non-trainable params: 106,240
-------------------------------------------------------------------------------
Input size (MB): 0.57
Forward/backward pass size (MB): 286.57
Params size (MB): 97.69
Estimated Total Size (MB): 384.84
-------------------------------------------------------------------------------
```
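The total in the summary can be cross-checked by hand. The sketch below recomputes the ResNet50 parameter count in plain Python, assuming bias-free convolutions and four values per BatchNorm2D channel, which is what the per-layer figures in the summary suggest (for example 256 for a 64-channel layer). `conv_params`, `bn_params`, `bottleneck_params` and `resnet50_params` are hypothetical helpers written for this check.

```python
def conv_params(in_ch, out_ch, k):
    """Weights of a bias-free k x k convolution."""
    return in_ch * out_ch * k * k


def bn_params(ch):
    """Values the summary reports per BatchNorm2D layer: 4 per channel (assumption)."""
    return 4 * ch


def bottleneck_params(in_ch, out_ch, stride):
    """Parameters of one BottleneckBlock, including the shortcut convolution if present."""
    mid = out_ch // 4
    total = conv_params(in_ch, mid, 1) + bn_params(mid)
    total += conv_params(mid, mid, 3) + bn_params(mid)
    total += conv_params(mid, out_ch, 1) + bn_params(out_ch)
    if stride != 1 or in_ch != out_ch:
        total += conv_params(in_ch, out_ch, 1) + bn_params(out_ch)
    return total


def resnet50_params(num_classes=1000):
    total = conv_params(3, 64, 7) + bn_params(64)  # 7x7 stem + BN
    in_ch = 64
    stages = zip([3, 4, 6, 3], [256, 512, 1024, 2048])
    for i, (blocks, out_ch) in enumerate(stages):
        for order in range(blocks):
            stride = 2 if order == 0 and i != 0 else 1
            total += bottleneck_params(in_ch, out_ch, stride)
            in_ch = out_ch
    total += in_ch * num_classes + num_classes  # fully connected layer with bias
    return total


total_params = resnet50_params()
print(f"{total_params:,}")
```

Under these assumptions the hand count agrees with the "Total params" line of the summary, and the fully connected layer alone accounts for 2,049,000 of the parameters.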

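ResNet50 contains four groups of residual blocks, A, B, C and D, with 3, 4, 6 and 3 blocks respectively. Each residual block has three convolutional layers on its residual path, so the residual blocks contain (3+4+6+3)×3=48 layers in total (the 1×1 convolutions on the shortcut paths are not counted). Adding the 7×7 convolutional layer at the start and the fully connected layer at the end gives 50 layers for the whole network, hence the name ResNet50.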
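The same counting rule can be applied to every configuration in `layers_config`. This is a short sketch with hypothetical names (`CONFIGS`, `counted_depth`), assuming two counted convolutions per Basic Block and three per Bottleneck Block, plus the stem convolution and the fully connected layer.

```python
# depth -> (convolutions per residual block, blocks per stage)
CONFIGS = {
    18: (2, [2, 2, 2, 2]),
    34: (2, [3, 4, 6, 3]),
    50: (3, [3, 4, 6, 3]),
    101: (3, [3, 4, 23, 3]),
    152: (3, [3, 8, 36, 3]),
}


def counted_depth(convs_per_block, num_blocks):
    """Convolutions in the residual paths, plus the 7x7 stem and the final FC layer."""
    return sum(num_blocks) * convs_per_block + 2


depths = {name: counted_depth(*cfg) for name, cfg in CONFIGS.items()}
print(depths)
```

For each configuration the counted depth equals the number in the model's name, so the naming follows the same rule for all five variants.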

4. References

- https://arxiv.org/pdf/1312.6184v1.pdf

- https://arxiv.org/pdf/1512.03385.pdf

- https://aistudio.baidu.com/aistudio/projectdetail/2299651

- https://aistudio.baidu.com/aistudio/projectdetail/2270457

- https://www.cnblogs.com/shine-lee/p/12363488.html

- https://github.com/PaddlePaddle/Paddle/blob/release/2.1/python/paddle/vision/models/resnet.py
